Fullcast's Data Quality functionality assesses your data on three key dimensions: completeness, uniqueness, and accuracy. With this assessment, you can make informed decisions about which data points to use in your go-to-market (GTM) strategy and territory creation. This article covers Fullcast's Data Quality settings, focusing on data quality thresholds: how they work and how they benefit you.

Data Quality

It’s true that sales and revenue operations teams often complain about data quality. But when building a GTM strategy, it is crucial to focus on the right data. Here’s a breakdown:

Focus on Account Data: While contact and opportunity data are important for day-to-day sales activities, account data is the foundation of your GTM strategy. This is because your market segmentation and territory planning rely heavily on accurate information about the companies you are targeting.

Prioritize Key Fields: Within your account data, focus on the specific fields that directly impact your GTM model. These are the fields used to segment your market, define ideal customer profiles, and build territories. By prioritizing these key fields, you can make data quality improvements more manageable.

Data Sources Matter:

CRM Data: Your CRM is the primary source of truth for account data. However, it's important to establish clear processes for data entry and maintenance to ensure accuracy.

Third-Party Data: Third-party data providers like Dun & Bradstreet and ZoomInfo can be helpful, but keep in mind:

Recency: This data can be outdated, so prioritize information from the sales team members who interact directly with accounts.

Specialization: Global data providers may be less accurate outside the US or lack industry-specific data. Consider supplementing with specialized providers.

Fullcast's Role: Fullcast's data quality functionality helps you clean and optimize the data within your CRM. While we can offer best practices and suggest reputable data providers, we can't directly improve the quality of external data sources.

Key Takeaways:

Focus on account data and key fields for GTM strategy.

Prioritize your CRM as the source of truth and establish good data hygiene practices.

Be mindful of the limitations of third-party data.

Supplement global data providers with specialized providers for industry-specific needs.

Data Quality in Fullcast

Fullcast's Data Quality functionality enables users to set thresholds on acceptable data quality levels during the import process. This feature is particularly useful for fields used in creating territory hierarchies. Here's how it works:

Data Quality Dimensions and Thresholds

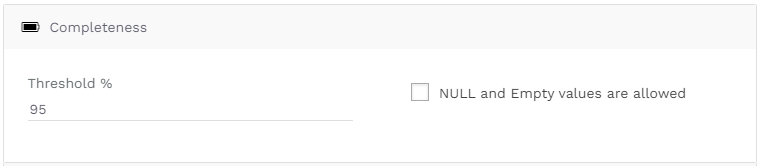

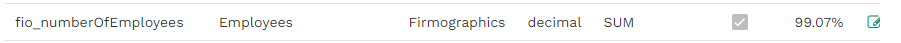

Completeness: This measures how "filled in" a field is across all records. For example, a completeness threshold of 95% means that at least 95% of the records must have this field filled in. Below is a screenshot of the settings we used for the Number of Employees field. Note that you can also choose whether to accept Null or Empty values. In this case, we require accurate employee counts as part of our market segmentation, so we are not allowing nulls.

Completeness thresholds for the Number of Employees field

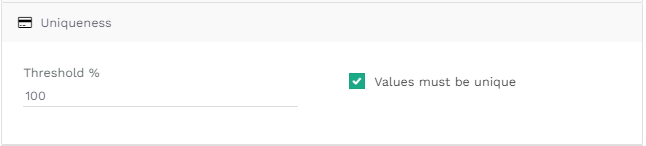
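To make the completeness calculation concrete, here is a minimal sketch in Python. It is not Fullcast's implementation: the function name `completeness`, the `accept_null` flag, and the sample values are assumptions made for illustration only.

```python
def completeness(values, accept_null=False):
    """Return the percentage of values that count as filled in.

    Hypothetical helper: when accept_null is True, missing values
    (None or blank strings) are treated as acceptable.
    """
    values = list(values)
    if not values:
        return 0.0

    def is_acceptable(value):
        missing = value is None or (isinstance(value, str) and not value.strip())
        return accept_null or not missing

    return 100.0 * sum(1 for v in values if is_acceptable(v)) / len(values)


# Illustrative Number of Employees values; two of the ten are missing.
employee_counts = [120, None, 45, "", 3000, 18, 75, 9, 410, 62]

score = completeness(employee_counts)
meets_threshold = score >= 95.0  # compare against a 95% completeness threshold
print(score, meets_threshold)
```

With nulls disallowed, the two missing values pull this sample well below a 95% threshold, which is the situation the threshold is meant to surface.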

Uniqueness: This looks at every value in a given field to confirm it is unique. For instance, on a Company ID field, setting a uniqueness threshold of 100% ensures that every ID number is unique, which is crucial to avoid duplicates. Below is a screenshot we used for the Company ID field.

Uniqueness thresholds for the Company ID field

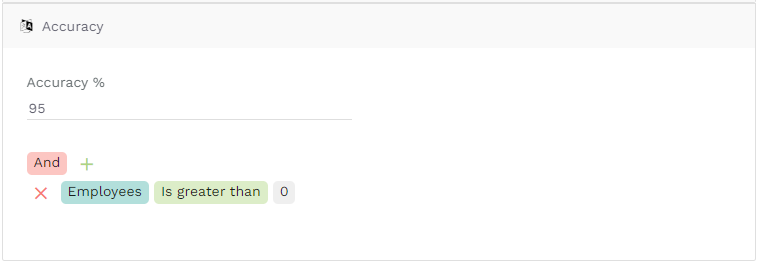
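As a rough illustration of a uniqueness check, the sketch below measures the share of records whose value appears exactly once. This definition, the function name `uniqueness`, and the sample IDs are assumptions; Fullcast may compute the metric differently.

```python
from collections import Counter


def uniqueness(values):
    """Return the percentage of records whose value occurs exactly once."""
    values = list(values)
    if not values:
        return 0.0
    occurrences = Counter(values)
    unique_records = sum(1 for v in values if occurrences[v] == 1)
    return 100.0 * unique_records / len(values)


# Illustrative Company ID values; "A-002" is duplicated.
company_ids = ["A-001", "A-002", "A-003", "A-002", "A-004"]

score = uniqueness(company_ids)
print(score)           # share of records with a unique ID
print(score >= 100.0)  # a 100% threshold fails as soon as one duplicate exists
```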

Accuracy (also referred to as "Validity"): This ensures that the values in a field are valid according to a predefined set of conditions. For example, you can set a rule that employee counts of 0 are not valid. Below is a screenshot we used for the Number of Employees field.

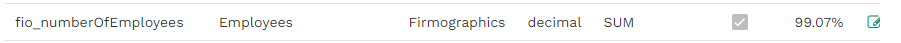
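A validity rule such as "employee counts of 0 are not valid" can be thought of as a predicate applied to every value. The sketch below is an assumption-laden illustration, not Fullcast's rule engine; `accuracy` and `valid_employee_count` are hypothetical names.

```python
def accuracy(values, is_valid):
    """Return the percentage of values that satisfy the validity rule."""
    values = list(values)
    if not values:
        return 0.0
    return 100.0 * sum(1 for v in values if is_valid(v)) / len(values)


def valid_employee_count(value):
    """Example rule: the count must be a whole number greater than zero."""
    return isinstance(value, int) and not isinstance(value, bool) and value > 0


# Illustrative Number of Employees values; the 0 breaks the rule.
employee_counts = [120, 0, 45, 3000]

print(accuracy(employee_counts, valid_employee_count))
```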

Data Quality Score

Based on the thresholds set for each of these dimensions, Fullcast will create an aggregate data quality score. The score represents the percentage of the records in your system which pass all three of your thresholds for Completeness, Uniqueness, and Accuracy. This score can be viewed in the Entities & Fields settings page. Below is a screenshot of the Number of Employees field, which has a Data Quality score of 99.07%.
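One way to picture the aggregate score is as the share of records that pass every check at once. The following sketch assumes a record passes when its value is filled in, is not duplicated, and satisfies a validity rule; the function name, the `require_unique` flag, and the sample IDs are hypothetical, and the actual Fullcast calculation may differ.

```python
from collections import Counter


def data_quality_score(values, is_valid, require_unique=True):
    """Return the percentage of records passing all enabled checks."""
    values = list(values)
    if not values:
        return 0.0
    occurrences = Counter(v for v in values if v is not None)
    passing = 0
    for value in values:
        complete = value is not None
        unique = complete and (not require_unique or occurrences[value] == 1)
        valid = complete and is_valid(value)
        if complete and unique and valid:
            passing += 1
    return round(100.0 * passing / len(values), 2)


# Illustrative IDs: one missing, one duplicated pair, one malformed.
ids = ["C-1", "C-2", None, "C-2", "X9", "C-3"]
score = data_quality_score(ids, lambda v: v.startswith("C-"))
print(score)
```

Only "C-1" and "C-3" pass all three checks here, so two of the six records count toward the score.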

In addition to thresholds on individual fields, Fullcast can apply data quality thresholds to relationships between fields on different objects. For example, if you import both accounts and opportunities from Salesforce, you could import opportunities that reference accounts that haven’t been imported into Fullcast. Fullcast's Data Quality functionality can be configured to prevent this situation so that all references across records are valid.
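The cross-object check described above amounts to a referential integrity test. Here is a minimal sketch, assuming records are plain dictionaries keyed by the Salesforce-style field names `Id` and `AccountId`; the function `find_orphaned_opportunities` and the sample records are illustrative only.

```python
def find_orphaned_opportunities(opportunities, accounts):
    """Return opportunities whose AccountId matches no imported account."""
    imported_account_ids = {account["Id"] for account in accounts}
    return [
        opp for opp in opportunities
        if opp.get("AccountId") not in imported_account_ids
    ]


accounts = [{"Id": "001A"}, {"Id": "001B"}]
opportunities = [
    {"Id": "006X", "AccountId": "001A"},
    {"Id": "006Y", "AccountId": "001Z"},  # references an account that was not imported
]

orphans = find_orphaned_opportunities(opportunities, accounts)
print([opp["Id"] for opp in orphans])
```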

Data Quality Indicators

Data quality scores are represented using a color-coded tagging system:

Green: The data meets the threshold.

Yellow: The data is below the threshold but not significantly.

Red: The data falls significantly below the threshold.
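The color tags can be sketched as a simple mapping from score and threshold to a tag. The article does not say how far below the threshold a score must fall before it turns red, so the `warning_margin` of 5 percentage points below is purely an assumption, as is the function name `quality_tag`.

```python
def quality_tag(score, threshold, warning_margin=5.0):
    """Map a data quality score to a color tag.

    warning_margin is a hypothetical cutoff: scores within this many
    percentage points below the threshold are tagged yellow.
    """
    if score >= threshold:
        return "green"
    if score >= threshold - warning_margin:
        return "yellow"
    return "red"


print(quality_tag(99.07, 95.0))
print(quality_tag(92.0, 95.0))
print(quality_tag(50.0, 95.0))
```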

To avoid undue concern about data quality, Fullcast limits the visibility of data quality scores to specific areas:

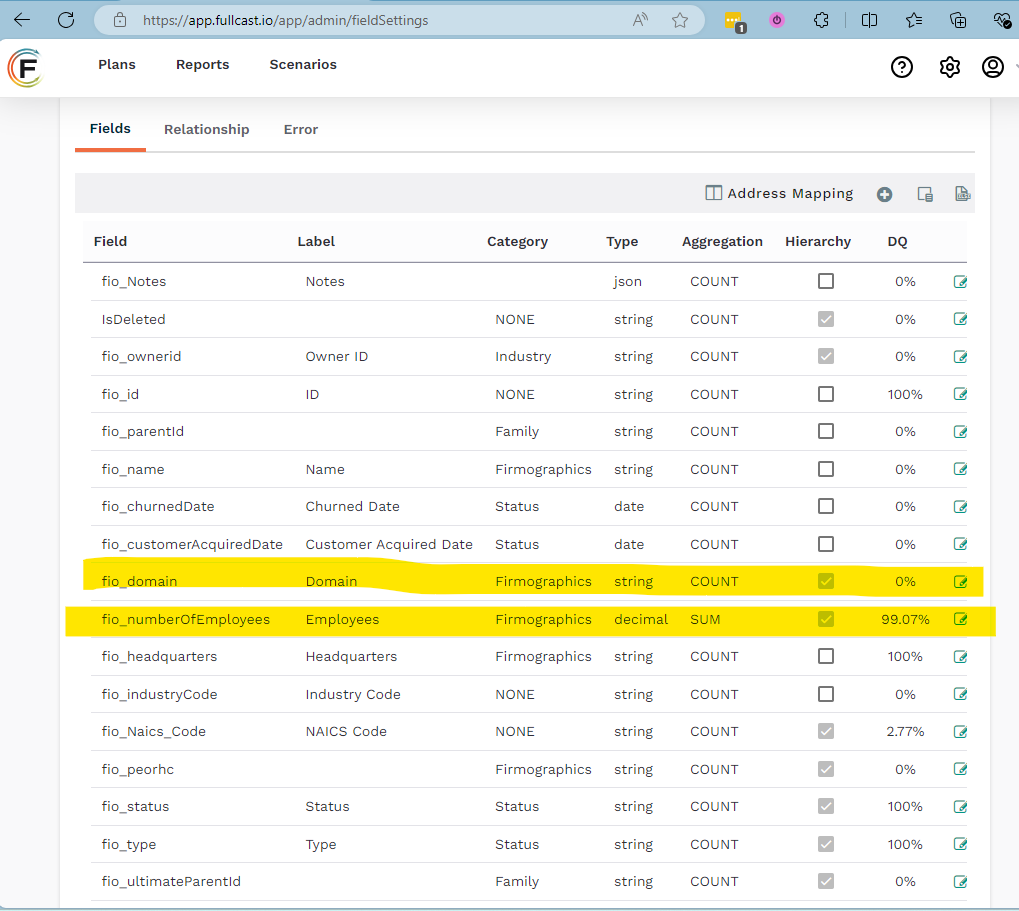

Entities & Fields Imports Settings: Users can see data quality indicators when configuring imports. See screenshot below.

In the Entities & Fields Settings, you can see the data quality score indicated in the column labeled DQ.

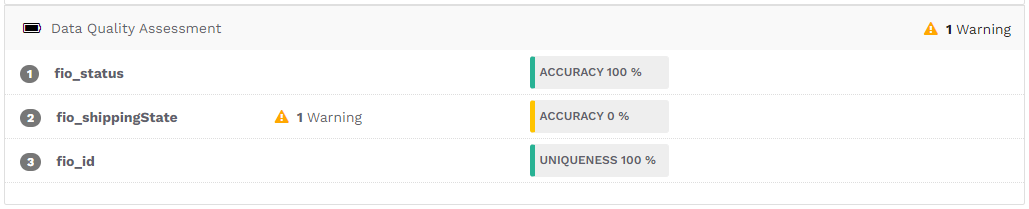

Segmentation Rule Creation: Data quality indicators are displayed for fields used in segmentation rules. For instance, if you're using employee counts to create segments (e.g., enterprise, mid-market, SMB), but the fill rate for employee counts is only 50%, many accounts will end up Unassigned due to missing data. In the screenshot below, you can see the data quality tag for each of the fields used to create the territory segmentation rules.

Data quality scores for Status, Shipping State, and ID fields
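To see why a low fill rate leaves accounts Unassigned, consider this small sketch of an employee-count segmentation rule. The size cutoffs (1,000 and 100 employees), the account names, and the `segment` function are invented for illustration and are not Fullcast defaults.

```python
def segment(employee_count):
    """Assign a segment from employee count; missing data cannot be segmented."""
    if employee_count is None:
        return "Unassigned"
    if employee_count >= 1000:  # hypothetical enterprise cutoff
        return "Enterprise"
    if employee_count >= 100:   # hypothetical mid-market cutoff
        return "Mid-Market"
    return "SMB"


# Illustrative accounts with a 50% fill rate on employee count.
accounts = {"Acme": 5000, "Globex": None, "Initech": 250, "Umbrella": None}

assignments = {name: segment(count) for name, count in accounts.items()}
unassigned_share = 100.0 * sum(
    1 for s in assignments.values() if s == "Unassigned"
) / len(assignments)
print(assignments)
print(unassigned_share)
```

Every account without an employee count lands in Unassigned, so the unassigned share mirrors the gap in the fill rate.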

Data Pre-processing

As part of the standard import process, Fullcast performs the following pre-processing:

Geographical Data: Fullcast compares address information against Mapbox, a geographical data provider, to normalize inconsistencies.

Industry Data: NAICS codes are verified with the NAICS database to ensure validity.

Parent-Child Relationships: Parent-child relationships are validated to ensure no loops or errors.
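The parent-child validation can be illustrated with a loop check over an account hierarchy. This is a sketch under stated assumptions, not Fullcast's validation logic: the hierarchy is modeled as a dictionary mapping each account ID to its parent ID (or `None` for a top-level account), and `find_loops` is a hypothetical helper.

```python
def find_loops(parent_of):
    """Return the set of account IDs that sit on a parent-child loop."""
    on_loop = set()
    done = set()  # accounts already walked in an earlier pass
    for start in parent_of:
        path, position = [], {}
        node = start
        # Walk up the hierarchy until reaching a root, a known account,
        # or an account already seen on this walk.
        while node is not None and node not in done and node not in position:
            position[node] = len(path)
            path.append(node)
            node = parent_of.get(node)
        if node in position:  # walked back onto the current path: a loop
            on_loop.update(path[position[node]:])
        done.update(path)
    return on_loop


# Illustrative hierarchy: C -> D -> E -> C forms a loop; F hangs off it.
hierarchy = {"A": None, "B": "A", "C": "D", "D": "E", "E": "C", "F": "C"}
print(sorted(find_loops(hierarchy)))
```

Accounts that merely point into a loop, like "F" here, are not reported as part of it, which keeps the list of records to fix as short as possible.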

Data Policies

As your RevOps team institutes data governance to maintain data quality and integrity, we recommend keeping the following key points in mind.

Suggested Policy: Sources of Truth

Fullcast can provide customers with documentation of our suggested policies for sources of truth, particularly in cases where you source data from multiple providers. These policies help maintain consistency and reliability in data used for territory carving and other critical operations.

Fullcast Data Policies Overview

Account Families: Policies to set rules for accurate matching of parent and child accounts.

Account Dedupe: Policies to deal with duplicate accounts.

Industry Taxonomy: Preferences on industry values for territory carving, customizable based on business needs.

Benefits of Fullcast's Data Quality Functionality

Enhanced Decision-Making: By ensuring high data quality, businesses can make more informed decisions, leading to better outcomes.

Improved Operational Efficiency: Automating data quality checks and thresholds reduces manual data cleaning efforts, saving time and resources. In practice, we have heard from teams who feel they need a master data project to improve their RevOps and GTM strategy. That is rarely the case. Focusing on the fields that matter, and investing in assessing, improving, and maintaining data quality on those fields, is not only a better approach but also less costly than a huge undertaking to clean up all your data.

Increased Trust in Data: With reliable and accurate data, stakeholders can have greater confidence in the information they use for strategic planning and daily operations.

Fullcast's Data Quality functionality is an essential tool for maintaining high standards of data integrity. By setting and monitoring data quality thresholds, businesses can ensure their data is complete, unique, and accurate, leading to better decision-making and operational efficiency. Implementing these data quality measures helps overcome common data challenges and establishes a robust foundation for successful revenue operations.